Remembering this killer ILM shot from Deep Impact

By Ian Failes

“I was foolishly optimistic and energetic.” – Christopher Horvath

Mimi Leder’s Deep Impact is now 20 years old. The film had a wide array of visual effects work from Industrial Light & Magic – from spacecraft miniatures to digital comets and, perhaps most memorably, a swathe of wave and water simulations. This was still early days in CG water, and ILM used several techniques to achieve different kinds of water shots.

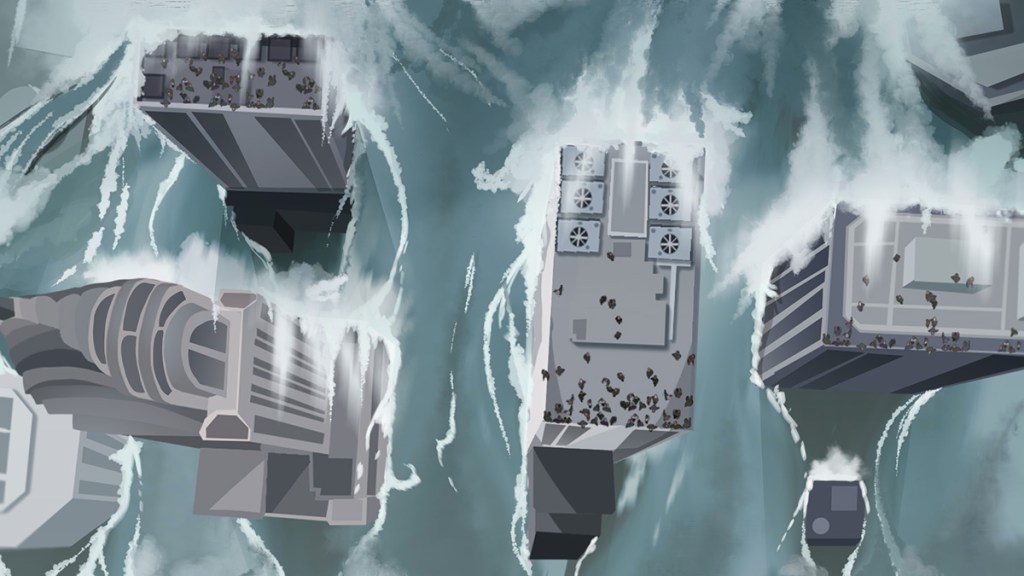

One shot I specifically remembered from the film was an aerial view above some New York City skyscrapers of the water smashing in between the gaps in the buildings. Digital effects artist Christopher Horvath, only very new to ILM at the time and later the co-founder of Tweak Software, was behind the CG simulations of that overhead shot.

For the film’s 20th anniversary, Horvath spoke to vfxblog about ‘jerry-rigging’ the solution for that shot, his memories of working on Deep Impact, and later founding Tweak.

vfxblog: What are your memories of the requirements of that aerial NYC tidal wave shot, and what steps you took to achieve it?

Christopher Horvath: I joined Deep Impact after being on Snake Eyes, which was my first show at ILM. When I joined, there were only 8 weeks remaining until the project wrapped. The overhead shot (which was called wc9) had been worked on by several other TDs who were exploring ways of achieving the look via texture deformation in shaders, but without much success. I was foolishly optimistic and energetic, and came up with an idea after talking with my office neighbour Jeremy Goldman, which involved creating a voxel grid (I didn’t know it was called that at the time, so I called them a stacked cube array), in which particles would be binned.

Above: watch a clip of the whole sequence. Jump to 1:48 for the aerial shot.

My idea was that if a cube filled up with more particles than it could hold, it would disperse some particles to neighbouring cubes, and this would have the global effect of creating a fluid-like flow. My cube array was very coarse – 64x64x32 – and furthermore I could only accommodate collision objects (buildings) whose walls lined up exactly with the cube walls of the grid. Fortunately the shot was amenable to these constraints, though in the final render of the simulation there were clear places where the water was separated from the building edges and had to be paint-fixed. All of the paint fixes in the shot were done by the amazing Patrick Jarvis, working in Matador and painting with a mouse.
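For readers curious what that "overflowing cubes" idea might look like in code, here is a minimal Python sketch. It is not Horvath's implementation (that was written in C and is not shown in the interview). The per-cube capacity, the even split across six face neighbours, and every function name below are assumptions made purely for illustration.

```python
from collections import Counter

# Face-adjacent offsets: each cube can hand particles to up to six neighbours.
OFFSETS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def bin_particles(positions, cell_size=1.0):
    """Count how many particles fall into each cube of the grid."""
    cells = (tuple(int(c // cell_size) for c in p) for p in positions)
    return dict(Counter(cells))


def open_neighbours(cell, shape, solid):
    """Yield in-bounds neighbouring cubes that are not blocked by a building."""
    for offset in OFFSETS:
        nb = tuple(cell[i] + offset[i] for i in range(3))
        if all(0 <= nb[i] < shape[i] for i in range(3)) and nb not in solid:
            yield nb


def disperse(counts, shape, capacity, solid=frozenset()):
    """One pass: every over-full cube pushes its excess to open neighbours.

    `capacity` is a hypothetical per-cube limit; the interview gives no number.
    `solid` is a set of grid-aligned cubes standing in for buildings.
    """
    out = dict(counts)
    for cell, n in counts.items():
        excess = n - capacity
        if excess <= 0:
            continue
        targets = list(open_neighbours(cell, shape, solid))
        if not targets:
            continue  # boxed in on all sides: nothing can leave this pass
        share, extra = divmod(excess, len(targets))
        for i, nb in enumerate(targets):
            out[nb] = out.get(nb, 0) + share + (1 if i < extra else 0)
        out[cell] -= excess
    return out


if __name__ == "__main__":
    counts = bin_particles([(1.5, 1.5, 1.5)] * 10)
    # The interview quotes a 64x64x32 grid; a tiny one is used here.
    print(disperse(counts, shape=(3, 3, 3), capacity=4, solid={(2, 1, 1)}))
```

Repeating the pass frame after frame spreads particles outward from crowded cubes, and because buildings are represented as whole blocked cubes, the sketch has the same grid-aligned limitation Horvath describes.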

About two weeks into the shot, with six weeks to go, I encountered the 1996 Foster-Metaxas paper on fluid simulation, which described a Particle-In-Cell voxel grid solution of the Navier-Stokes equations for incompressible free-surface flows. I can’t remember how I found the paper, as this was long before we used the internet to readily find resources like this. A few times during those early years at ILM I snuck into the library at Berkeley to copy papers, so that must have been how I found this paper back then. Nick Foster was at the time working at PDI/DreamWorks on creating water simulation for the movie Antz. This paper helped provide a framework and an anchor for my vague and ill-formed particles-in-cubes idea, and I set about attempting to implement it as quickly as possible.

I only had C at the time, not even C++, and I was not as experienced a programmer as I am now. But I look back on those weeks with such fondness – I worked around the clock, and was fairly stressed out. Ben Snow was my CG Supervisor and as the 5 week mark closed in he gently let me know that I had to get something working. By that time I think I had some initial tests to show. Though the simulation was self-contained, it output particles in the Wavefront PDB file format, so that they could be read and played back by Dynamation, which was how we reviewed results. Even playback was a big deal back then – our machines couldn’t play back image sequences at speed, so we had to go to a special room with Abekas disk drives to view our results. The old ILM “DOALL” scripts had three phases – RENDER, COMP, and ABBY, the last of which sent the shot to the Abekas. It’s amazing to me that we got anything done.

The simulation pretty much worked, though it encountered instabilities in most of the simulation attempts – areas where pressure would suddenly increase and cause a ‘pop’, creating a big splash which filled back in with water. The final shot features one or two of these instabilities, which I was unable to get rid of. At the time I thought it was because I had implemented the thing wrong, but in fact it was just that the Successive Over-Relaxation and forward-integrated timestep techniques described in the paper were fundamentally unstable. Ironically, John Anderson, an expert on fluid simulation, was already working at ILM at the time, but I didn’t know him yet and didn’t know that there was someone I could reach out to for help.

The shot was only 67 frames long with handles and goes by in a flash, so there was a lot of room for error, which was great because there were a LOT of errors. I didn’t really understand the distinction between camera and world space when I wrote the RenderMan shaders for the water surface (which I created as a height field from the particles), so the reflections were terrible. Everything got softened like crazy in the comp, and I think we even ended up doing a re-time of the simulation towards the end of the shot, which leads to a little bit of stuttering in some of the splash particles. Still, I’m so proud of this shot! It was such a long shot, with so little room for error, and I love that we just made it work.

vfxblog: What made that particular shot and the way the waves went through the buildings a particular challenge? There’s also the great view of the people running to the other side of the roofs – how was that achieved?

Christopher Horvath: This reminds me of one of my favourite moments during those early years (for me) at ILM. Deep Impact was a rough show, and towards the end the mood was pretty tense. I remember that somebody threw a chair at somebody else in dailies at some point, some people had been asked to leave, and a bunch of stuff was behind schedule. Overall it was a grim mood.

The people running on top of the buildings were handled by Russell Earl, who has since gone on to be one of ILM’s visual effects supervisors and has been nominated for an Oscar. The people were simulated as particles in Dynamation, and they’re so small they’re barely a pixel big, especially at ILM’s surprisingly low resolution at the time, which I believe was 1700 pixels horizontally, or thereabouts.

The reason it’s a favourite memory is that every day when Russell presented the people simulation in dailies, he had these elaborate stories about what was happening with everyone on the roof – ‘Look, the woman in the pink sweater stops running because her heel gets stuck in a grating, and that’s why she’s still right in back when the water hits,’ or, ‘This guy, who was really a jerk before, stops to help the woman with the baby, and you can see him shielding them here.’

The whole time Russell was doing this he’d be shuttling the Abby back and forth between frames, and pointing at a simulation of 1-pixel wide dots. And everyone took it with a complete deadpan. Scott Farrar was the supervisor for these shots and he acted like it was totally serious, making comments like, ‘Good work, excellent attention to detail.’ It was like everyone silently agreed to do this weird deadpan comedy routine in the middle of a super stressful project. I absolutely loved it. If we’re not having fun, why bother killing ourselves on these things, as we do?

vfxblog: Later, you co-founded Tweak, and I remember people telling me the water sims that were done at Tweak were so fast, reliable and realistic – what was it that made those things possible do you think?

Christopher Horvath: I think our simulation engines were successful for a number of reasons. Firstly, just having Jim Hourihan, the original author of Dynamation and one of the architects of ILM’s Zeno, meant that our architecture was second-to-none. We were able to build systems very quickly to solve specific problems, and Jim knew about performance design considerations that I feel like our industry has only recently caught up with – cache-coherent, data-centric design, structs-of-arrays instead of arrays-of-structs – all of these critical considerations came from Jim.
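To make the "structs-of-arrays instead of arrays-of-structs" distinction concrete, here is a small Python sketch of the two layouts. It is illustrative only and says nothing about how Tweak's engines were actually written. The class and function names are hypothetical, and Python itself will not show the cache benefit that the same layout gives in C or C++.

```python
from array import array
from dataclasses import dataclass


@dataclass
class Particle:
    """Array-of-structs: one object per particle, fields stored together."""
    x: float
    y: float
    vx: float
    vy: float


def step_aos(particles, dt):
    """Advance every particle; each iteration touches one whole object."""
    for p in particles:
        p.x += p.vx * dt
        p.y += p.vy * dt


class ParticlesSoA:
    """Struct-of-arrays: one contiguous array per field, indexed by particle."""

    def __init__(self, xs, ys, vxs, vys):
        self.x = array("d", xs)
        self.y = array("d", ys)
        self.vx = array("d", vxs)
        self.vy = array("d", vys)

    def step(self, dt):
        """Advance every particle by walking the per-field arrays in order."""
        for i in range(len(self.x)):
            self.x[i] += self.vx[i] * dt
            self.y[i] += self.vy[i] * dt


if __name__ == "__main__":
    aos = [Particle(0.0, 0.0, 1.0, 2.0), Particle(1.0, 1.0, 0.0, -1.0)]
    step_aos(aos, 0.5)

    soa = ParticlesSoA([0.0, 1.0], [0.0, 1.0], [1.0, 0.0], [2.0, -1.0])
    soa.step(0.5)

    print(aos)
    print(list(soa.x), list(soa.y))
```

Both versions compute the same positions. The difference is that in the second, a pass that only needs `x` and `vx` reads two tightly packed arrays rather than hopping between separate per-particle records, which is the property a compiled language can exploit for cache-friendly access.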

Above: A clip from The Day After Tomorrow. Tweak handled the aerial views of New York as the waves flood in.

Secondly – we tended to rely on our comfort as software builders to allow us to only solve the problems that we had. We resisted the temptation to build a big generic system for handling everything. Instead, we built custom engines on almost a per-shot basis, and I think this really helped.

Lastly… I think we had the benefit of focus, and having worked on a single thing with passion and intensity for a very long period of time, through multiple iterations. We just did water, and in general simulations, over and over and over.

Illustration by Maddi Becke.
